DDIA - Data Driven Immersive Analytics

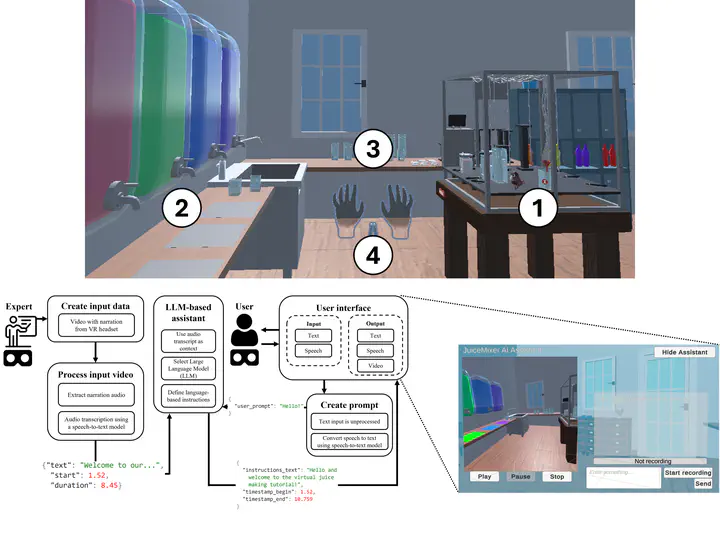

Immersive AI assistant for virtual juice mixing, highlighting VR interaction, system design, and multimodal instructions.

Project Overview (October 2023 – June 2026)

The DDIA (Data Driven Immersive Analytics) project is a COMET-funded initiative that aims to improve immersive analytics and digital twin interactions for industrial applications. Digital twins (interactive digital representations of real-world objects) are vital tools for remote collaboration, operational support, and training. However, current immersive methods often fall short in usability and intuitiveness, which creates barriers to effective interaction.

Detailed project information can be found on the official DDIA project website and the FFG COMET factsheet.

Objectives

DDIA’s key goals are:

- Enhancing immersive interaction paradigms and analytics.

- Improving remote collaboration and training through intuitive AR/VR interfaces.

- Personalizing user experiences by integrating physiological sensing data.

Technical Challenges

Key challenges addressed in this project include:

- Creating seamless and intuitive interactions with digital twins in immersive environments.

- Integrating large language models with real-time AR/VR applications while maintaining performance.

- Developing effective multimodal interfaces combining speech, gesture, and traditional input methods.

- Processing and utilizing physiological data to adapt interfaces in real time.

- Ensuring cross-platform compatibility between different AR/VR hardware ecosystems.

- Balancing computational requirements of AI assistants with the performance constraints of mobile VR/AR devices.
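To make the physiological-adaptation challenge more concrete, here is a minimal sketch of one possible approach, not the project's actual method. It assumes heart rate is the sensed signal and that the interface has two hypothetical instruction modes, "full" and "simplified". The class name, thresholds, and smoothing factor are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdaptiveAssistant:
    """Hypothetical controller that picks an instruction mode from heart rate."""

    baseline_bpm: float                   # assumed resting heart rate of the user
    alpha: float = 0.2                    # smoothing factor of the moving average
    raise_ratio: float = 1.20             # go "simplified" above 120% of baseline
    lower_ratio: float = 1.10             # return to "full" below 110% of baseline
    smoothed_bpm: Optional[float] = None  # running average, unset until first sample
    mode: str = "full"

    def update(self, bpm: float) -> str:
        """Feed one heart-rate sample and return the mode the UI should use."""
        # An exponential moving average damps sensor noise and single outliers.
        if self.smoothed_bpm is None:
            self.smoothed_bpm = bpm
        else:
            self.smoothed_bpm = self.alpha * bpm + (1 - self.alpha) * self.smoothed_bpm

        ratio = self.smoothed_bpm / self.baseline_bpm
        # Hysteresis: two separate thresholds keep the UI from flickering
        # between modes when the signal hovers around a single cut-off.
        if self.mode == "full" and ratio > self.raise_ratio:
            self.mode = "simplified"
        elif self.mode == "simplified" and ratio < self.lower_ratio:
            self.mode = "full"
        return self.mode


if __name__ == "__main__":
    assistant = AdaptiveAssistant(baseline_bpm=70.0)
    for sample in [70, 100, 100, 100, 70, 70, 70, 70]:
        print(sample, assistant.update(sample))
```

Smoothing plus hysteresis is a simple way to keep the adaptation stable: a single elevated reading does not change the interface, while a sustained rise does. A real system would likely combine several signals and calibrate the thresholds per user.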

Technologies and Methods

DDIA combines the following AI and immersive technologies:

- Large Language Models: Integration of GPT (OpenAI), LLaMA (Meta), Claude (Anthropic), DeepSeek, and Qwen (Alibaba) to build intelligent AI assistants for machine operation and training.

- Unity-based AR/VR Development: Interfaces developed for Meta Quest and Microsoft HoloLens to facilitate immersive, interactive experiences with digital twins.

- Speech Interaction: OpenAI Whisper for speech-to-text, together with text-to-speech output, enabling natural voice-driven interactions.

- Physiological Sensing: Capturing physiological data to personalize interactions and enhance user comfort and efficiency.

- Meta Aria Glasses: Collecting data on how experts operate machines to refine future training and interaction paradigms.
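The way speech recognition, a language model, and speech output fit together can be sketched as a single conversational turn. This is an illustrative outline under stated assumptions, not the DDIA implementation: `VoiceAssistant`, the three callable types, and the stub backends are hypothetical names. In a real setup the stubs would be replaced by a speech-to-text model such as Whisper, a chat-capable LLM, and a text-to-speech engine.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]
Transcriber = Callable[[bytes], str]       # audio in  -> recognized text
ChatModel = Callable[[List[Message]], str] # chat messages -> assistant reply
Synthesizer = Callable[[str], bytes]       # reply text -> audio out


@dataclass
class VoiceAssistant:
    """Hypothetical glue code: speech-to-text -> LLM -> text-to-speech."""

    transcribe: Transcriber
    chat: ChatModel
    synthesize: Synthesizer
    system_prompt: str = "You guide a trainee through a machine task step by step."
    history: List[Message] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        """Process one spoken request and return the spoken answer."""
        user_text = self.transcribe(audio).strip()
        if not user_text:
            # Nothing was recognized, so skip the LLM call and leave history alone.
            return self.synthesize("Sorry, I did not catch that.")

        self.history.append({"role": "user", "content": user_text})
        messages = [{"role": "system", "content": self.system_prompt}] + self.history
        reply = self.chat(messages)
        self.history.append({"role": "assistant", "content": reply})
        return self.synthesize(reply)


# Stub backends so the sketch runs without any model or network access.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_chat(messages: List[Message]) -> str:
    return f"Step 1 for: {messages[-1]['content']}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


if __name__ == "__main__":
    assistant = VoiceAssistant(fake_transcribe, fake_chat, fake_synthesize)
    print(assistant.handle_turn(b"how do I add orange juice?"))
```

Keeping the three stages behind plain callables means a backend can be swapped, for example one LLM provider for another, without touching the turn logic. It also makes the pipeline testable without a headset.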

Personal Contribution

My involvement in DDIA includes:

- Designing and developing immersive AR/VR interfaces in Unity for Meta Quest and Microsoft HoloLens.

- Implementing AI assistants powered by advanced large language models.

- Integrating OpenAI Whisper to provide robust and intuitive speech interaction.

- Analyzing immersive and physiological data to enhance personalized interactions and improve collaborative workflows.

Through these activities, DDIA aims to deliver user-friendly, personalized digital experiences that improve remote collaboration, support, and training in industrial settings.